In the next series of posts we'll first talk about how RTP and SDP messages work, and some implementation details in two popular multimedia toolkits: FFmpeg and GStreamer. Afterwards, we'll see how to leverage this knowledge to build a reliable RTP connection between Kurento and mediasoup:

- RTP (I): Intro to RTP and SDP

- RTP (II): Streaming with FFmpeg

FFmpeg and GStreamer are two of the tools that come to mind for most developers when thinking about writing a quick script that works with RTP. Both of these tools offer libraries meant to be used from your programming language of choice, but they also provide handy command-line tools that become an "easier" alternative for those who don't want to write their own programs from scratch.

Of course, "easier" has to be quoted in the previous paragraph, because using these command-line tools still requires a good amount of knowledge about what the tool is doing, why, and how. It is important to have a firm grasp of some basic RTP concepts, to understand what is going on behind the scenes and be able to fix issues when they happen.

The Real-time Transport Protocol -- RTP

Our first topic is the Real-time Transport Protocol (RTP), the most popular method to send or receive real-time networked multimedia streams.

RTP has become a de facto standard: it is the mandated transport in WebRTC, and lots of other tools use it to transmit video or audio between endpoints. The basic principle behind RTP is very simple: an RTP session comprises a set of participants (we'll also call them peers) communicating with RTP, to either send or receive audio or video.

Participants wanting to send will partition the media into different chunks of data called RTP packets, then send those over UDP to the receivers.

Participants expecting to receive data will open a UDP port where they listen for incoming RTP packets. Those packets have to be collected and re-assembled, to obtain the media that was originally transmitted by the sender.

However, as the saying goes, the devil is in the details. Let's review several basic concepts and extensions over this initial principle.

RTP packets

RFC 3550 defines exactly what an RTP packet is: "A data packet consisting of the fixed RTP header, a possibly empty list of contributing sources, and the payload data". This is the actual shape of such a packet:

 0                   1                   2                   3
 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|V=2|P|X|  CC   |M|     PT      |       sequence number         |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                           timestamp                           |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|           synchronization source (SSRC) identifier            |
+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+
|            contributing source (CSRC) identifiers             |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|                          payload  ...                         |
|                               +-------------------------------+
|                               | RTP padding   | RTP pad count |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

All data before the payload is called the RTP Header, and contains some information needed by participants in the RTP session.

The RTP standard is more than 15 years old, and it shows: the RTP packet header contains some fields that are defined as mandatory, but are not really used any more by current RTP and WebRTC implementations. Here we'll only talk about the header fields that are most useful; for a full description of all fields defined by the RTP standard, refer to RFC 3550.

PT (Payload Type)

Identifies the format of the RTP payload. In essence, a Payload Type is an integer number that maps to a previously defined encoding, including clock rate, codec type, codec settings, number of channels (in the case of audio), etc. All this information is needed by the receiver in order to decode the stream.

Originally, the standard provided some predefined Payload Types for commonly used encoding formats at the time. For example, the Payload Type 34 corresponds to the H.263 video codec. More predefined values can be found in RFC 3551:

PT        encoding     media type   clock rate
          name                      (Hz)
_____________________________________________
24        unassigned   V
25        CelB         V            90,000
26        JPEG         V            90,000
27        unassigned   V
28        nv           V            90,000
29        unassigned   V
30        unassigned   V
31        H261         V            90,000
32        MPV          V            90,000
33        MP2T         AV           90,000
34        H263         V            90,000
35-71     unassigned   ?
72-76     reserved     N/A          N/A
77-95     unassigned   ?
96-127    dynamic      ?
dyn       H263-1998    V            90,000

Nowadays, instead of using a table of predefined numbers, applications can define their own Payload Types on the fly, and share them ad hoc between senders and receivers of the RTP streams. These Payload Types are called dynamic, and are always chosen from the range [96-127].

An example: in a typical WebRTC session, Chrome might decide that the Payload Type 96 will correspond to the video codec VP8, PT 98 will be VP9, and PT 102 will be H.264. The receiver, after getting an RTP packet and inspecting the Payload Type field, will be able to know what decoder should be used to successfully handle the media.

sequence number

This starts as an arbitrary random number, which then increments by one for each RTP data packet sent. Receivers can use these numbers to detect packet loss and to sort packets in case they are received out of order.
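Because the sequence number is a 16-bit field, it wraps around from 65535 back to 0, so loss detection has to compare numbers modulo 65536. Below is a minimal Python sketch of that idea; `seq_delta` and `count_lost` are hypothetical names, and the loss count is a simplified take on the "expected minus received" calculation from RFC 3550 (here, duplicated packets are simply ignored).

```python
SEQ_MOD = 1 << 16  # the sequence number is a 16-bit field


def seq_delta(prev: int, curr: int) -> int:
    """Signed distance from prev to curr, taking wraparound into account.

    Positive: curr is newer than prev. Negative: curr is older (late or duplicated).
    """
    diff = (curr - prev) % SEQ_MOD
    return diff - SEQ_MOD if diff >= SEQ_MOD // 2 else diff


def count_lost(seqs: list[int]) -> int:
    """Packets missing between the lowest and highest sequence number seen.

    seqs holds the sequence numbers in arrival order. Each one is "extended"
    beyond 16 bits, so that a wraparound doesn't look like a huge gap.
    """
    highest_seq = highest_ext = lowest_ext = seqs[0]
    seen = {seqs[0]}
    for seq in seqs[1:]:
        ext = highest_ext + seq_delta(highest_seq, seq)
        seen.add(ext)
        lowest_ext = min(lowest_ext, ext)
        if ext > highest_ext:
            highest_seq, highest_ext = seq, ext
    expected = highest_ext - lowest_ext + 1
    return expected - len(seen)
```

Note how a packet that arrives out of order (for example 1, 3, 2) is not counted as lost, because only the set of received numbers matters, not the order in which they arrived.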

timestamp

Again, this starts as an arbitrary random number, and then increases at the speed given by the media clock rate (defined by the Payload Type). It represents the sampling instant of the media contained in the RTP packet. The protocol doesn't use absolute timestamp values; instead, it uses differences between timestamps to calculate the time elapsed between packets. This allows synchronizing multiple media streams (think lip sync between video and audio tracks, which additionally relies on the mapping between RTP timestamps and wallclock time carried in RTCP Sender Reports), and also estimating network jitter.
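Since only timestamp differences matter, converting them to seconds means dividing by the clock rate, again taking wraparound into account (the field is 32 bits wide). A minimal Python sketch with a hypothetical helper name; for example, 30 fps video with a 90000 Hz clock advances the timestamp by 3000 per frame, and 20 ms Opus frames at 48000 Hz advance it by 960:

```python
TS_MOD = 1 << 32  # the RTP timestamp is a 32-bit unsigned field


def elapsed_seconds(ts_prev: int, ts_curr: int, clock_rate: int) -> float:
    """Time between two RTP timestamps of the same stream, in seconds.

    Differences larger than half the timestamp range are interpreted as
    going backwards (e.g. a reordered packet), not as a huge jump forward.
    """
    diff = (ts_curr - ts_prev) % TS_MOD
    if diff >= TS_MOD // 2:
        diff -= TS_MOD
    return diff / clock_rate


# 30 fps video with the usual 90 kHz video clock: 3000 ticks per frame.
print(elapsed_seconds(0, 3000, 90000))
# 20 ms Opus frames at 48 kHz: 960 ticks per frame.
print(elapsed_seconds(0, 960, 48000))
```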

SSRC (Synchronization Source)

Another random number, which identifies the media track (e.g. one single video or audio) being transmitted. Every individual media has its own identifier, in the form of a unique SSRC used throughout the RTP session. Receivers can easily identify which media each RTP packet belongs to by looking at the SSRC field in the packet header.
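Putting these fields together: the fixed part of the header is 12 bytes long, and is followed by CC 32-bit CSRC identifiers. The following Python sketch decodes it with the standard `struct` module; `RtpHeader` and `parse_rtp_header` are hypothetical names used for illustration, and the padding and extension flags are reported but not processed.

```python
import struct
from dataclasses import dataclass


@dataclass
class RtpHeader:
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int
    csrcs: list[int]


def parse_rtp_header(packet: bytes) -> RtpHeader:
    if len(packet) < 12:
        raise ValueError("packet shorter than the 12-byte fixed RTP header")
    # Network byte order: 2 single bytes, 16-bit seq, 32-bit timestamp, 32-bit SSRC.
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    cc = b0 & 0x0F  # number of CSRC identifiers that follow
    if len(packet) < 12 + 4 * cc:
        raise ValueError("truncated CSRC list")
    csrcs = list(struct.unpack(f"!{cc}I", packet[12:12 + 4 * cc]))
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=cc,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
        csrcs=csrcs,
    )
```

For instance, a packet whose first two bytes are 0x80 and 0xE0 decodes as version 2, no CSRCs, marker bit set, and Payload Type 96.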

RTP Control Protocol -- RTCP

RTP is typically transmitted over UDP, where none of the TCP reliability features are present. UDP favors skipping all the safety mechanisms, giving the maximum emphasis to reduced latency, even if that means having to deal with packet loss and other typical irregular behavior of networks, such as jitter.

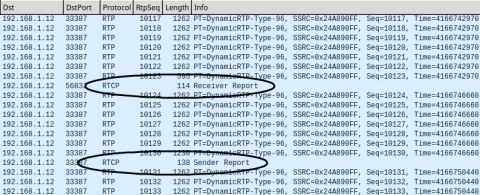

As a means to provide some feedback to each participant in an RTP session, all of them should send Real-time Transport Control Protocol (RTCP) packets, containing some basic statistics about their part of the conversation. Peers that act as senders will send both RTP and RTCP Sender Reports, while peers that act as receivers will receive RTP and send RTCP Receiver Reports.

These RTCP packets are sent much less frequently than the RTP packets they accompany; typically we would see one RTCP packet per second, while RTP packets are sent at a much faster rate.

An RTCP packet contains very useful information about the stream:

- SSRCs used by each media.

- CNAME, an identifier that can be used to group several medias together.

- Different timestamps, packet counts, and jitter estimations, from senders and receivers. These statistics can then be used by each peer to detect bad conditions such as packet loss.
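As an example of these statistics, the interarrival jitter carried in RTCP reports is defined in RFC 3550 (section 6.4.1) as a running estimate of how much the transit time varies between consecutive packets, smoothed with a gain of 1/16. A minimal Python sketch of that estimator, assuming arrival times are given in seconds and converted to timestamp units with the stream's clock rate (the function name is hypothetical, and floats are used instead of the integer arithmetic a real implementation would typically use):

```python
def interarrival_jitter(samples: list[tuple[float, int]], clock_rate: int) -> float:
    """Estimate RTP interarrival jitter, in timestamp units.

    samples: (arrival_time_in_seconds, rtp_timestamp) for each packet,
    in the order the packets arrived.
    """
    jitter = 0.0
    prev_transit = None
    for arrival, rtp_ts in samples:
        # Relative transit time; the unknown offset between the sender's and the
        # receiver's clocks cancels out when taking the difference below.
        transit = arrival * clock_rate - rtp_ts
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16
        prev_transit = transit
    return jitter
```

Perfectly paced packets produce a jitter of 0; a single packet arriving 16 ms late on a 1000 Hz clock pushes the estimate up gradually instead of all at once, which is the purpose of the 1/16 smoothing.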

Additionally, there is a set of optional extensions to the base RTCP format that have been developed over time. These are called RTCP Feedback (RTCP-FB) messages, and can be transmitted by the receiver as long as their use has been previously agreed upon by all participants:

- Google REMB is part of an algorithm that aims to adapt the sender video bitrate in order to avoid issues caused by network congestion. See Kurento | Congestion Control for a quick summary on this topic.

- NACK is used by the receiver of a stream to inform the sender about packet loss. Upon receiving an RTCP NACK packet, the sender knows that it should re-send some of the RTP packets that were already sent before.

- NACK PLI (Picture Loss Indication), a way for the receiver to tell the sender about the loss of some part of the video data. Upon receiving this message, the sender should assume that the receiver will not be able to decode further intermediate frames, and should send a new refresh frame instead. More information in RFC 4585.

- CCM FIR (Full Intra Request), another method for the receiver to let the sender know that a new full video frame is needed. FIR is very similar to PLI, but it's a lot more specific in requesting a full frame (also known as a keyframe). More information in RFC 5104.
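To make these messages less abstract, here is a rough Python sketch that builds a PLI and a Generic NACK following the layouts in RFC 4585: a common header with the FMT subtype, the RTCP packet type (205 for transport-layer feedback, 206 for payload-specific feedback), the length in 32-bit words minus one, the SSRC of the peer sending the feedback and the SSRC of the media source, followed by optional Feedback Control Information (FCI). The helper names are hypothetical, and the NACK builder ignores sequence number wraparound for brevity.

```python
import struct

RTPFB = 205  # RTCP packet type: transport-layer feedback (e.g. Generic NACK)
PSFB = 206   # RTCP packet type: payload-specific feedback (e.g. PLI)


def rtcp_feedback(fmt: int, packet_type: int, sender_ssrc: int,
                  media_ssrc: int, fci: bytes = b"") -> bytes:
    """Common RTCP-FB packet: 12-byte header followed by the FCI."""
    if len(fci) % 4:
        raise ValueError("FCI must be a multiple of 32 bits")
    length_words = (12 + len(fci)) // 4 - 1  # RTCP length: 32-bit words minus one
    first_byte = (2 << 6) | (fmt & 0x1F)     # V=2, P=0, FMT
    header = struct.pack("!BBHII", first_byte, packet_type, length_words,
                         sender_ssrc, media_ssrc)
    return header + fci


def build_pli(sender_ssrc: int, media_ssrc: int) -> bytes:
    # PLI is FMT=1 of the payload-specific feedback type, and carries no FCI.
    return rtcp_feedback(1, PSFB, sender_ssrc, media_ssrc)


def build_generic_nack(sender_ssrc: int, media_ssrc: int, lost: list[int]) -> bytes:
    """Generic NACK (FMT=1): each FCI entry holds a packet ID (PID) plus a
    16-bit bitmask (BLP) flagging which of the following 16 packets are also lost."""
    lost = sorted(set(lost))
    fci = b""
    i = 0
    while i < len(lost):
        pid, blp = lost[i], 0
        j = i + 1
        while j < len(lost) and lost[j] - pid <= 16:
            blp |= 1 << (lost[j] - pid - 1)
            j += 1
        fci += struct.pack("!HH", pid, blp)
        i = j
    return rtcp_feedback(1, RTPFB, sender_ssrc, media_ssrc, fci)
```

A PLI built this way is always 12 bytes long, while each Generic NACK entry adds 4 bytes and can report up to 17 lost packets.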

These extensions are most commonly found in WebRTC implementations, helping with packet loss and other network shenanigans. However, nothing prevents a plain RTP endpoint from implementing any or all of these methods, as Kurento's RtpEndpoint does.

These features might or might not be supported by both peers in an RTP session, so they must be explicitly negotiated and enabled. This is typically done with the SDP negotiation, which we'll cover next.

Session Description Protocol -- SDP

Before two peers start communicating with RTP, they need to agree on some parameters that will define the streaming itself: from network related considerations (such as what are their IP addresses and ports), to media-related stuff (like what codecs will be used for the audio or video encoding).

To achieve this we use SDP messages, which are plain text files that follow a loosely formatted structure, containing all the details needed to describe the streaming parameters. In this section, we'll give an overview of SDP messages, their format and their meaning, biased towards the concept of SDP Offer/Answer Model as used by WebRTC.

SDP messages

An SDP message, when generated by a participant in an RTP session, serves as an explicit description of the media that other remote peers should send to it. It's important to insist on this detail: in general (as with everything, there are exceptions), the SDP information refers to what an RTP participant expects to receive.

Another way to put this is that an SDP message is a request for remote senders to send their data in the format specified by the message.

RFC 4566 contains the full description of all basic SDP fields. Other RFC documents were written to extend this basic format, mainly by adding new attributes (a= lines) that can be used in the media-level section of the SDP files. We'll introduce some of them as needed for our examples.

Example 1: Simplest SDP

This is an example of the most basic SDP message one can find:

v=0
o=- 0 0 IN IP4 127.0.0.1
s=-
c=IN IP4 127.0.0.1
t=0 0
m=video 5004 RTP/AVP 96
a=rtpmap:96 VP8/90000

It gets divided into two main sections:

The first 5 lines are what is called the "session-level description":

v=0
o=- 0 0 IN IP4 127.0.0.1
s=-
c=IN IP4 127.0.0.1
t=0 0

It describes things such as the peer's host IP address, time bases, and summary description. Most of these values are optional, so they can be set to zero (0) or empty strings with a dash (-).

Next comes the "media-level description", consisting of a line that starts with m= and any number of additional attributes (a=) afterwards:

m=video 5004 RTP/AVP 96
a=rtpmap:96 VP8/90000

In this example, the media-level description reads as follows:

- There is a single video track.

- 5004 is the local port where other peers should send RTP packets.

- 5005 is the local port where other peers should send RTCP packets. In the absence of an explicit mention, the RTCP port is always calculated as the RTP port + 1.

- RTP/AVP is the profile that applies to this media-level description, as defined in RFC 3551.

- 96 is the expected Payload Type in the incoming RTP packets.

- VP8/90000 is the expected video codec and clock rate of the payload data contained in the incoming RTP packets.
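Reading a media-level description like this can also be automated. The following Python sketch splits an SDP message into its media-level descriptions and decodes the m=, a=rtpmap and a=fmtp lines. It is a simplified parser written for the examples in this post (`MediaDescription`, `parse_fmtp_params` and `parse_media` are hypothetical names), and it does not handle every case allowed by RFC 4566, such as ports written in the port/count form.

```python
from dataclasses import dataclass, field


@dataclass
class MediaDescription:
    media: str                 # "audio", "video", ...
    port: int                  # where remote peers should send RTP
    profile: str               # e.g. "RTP/AVP"
    payload_types: list[int]   # in order of preference
    rtpmap: dict[int, str] = field(default_factory=dict)           # PT -> "codec/clock[/channels]"
    fmtp: dict[int, dict[str, str]] = field(default_factory=dict)  # PT -> format parameters
    attributes: list[str] = field(default_factory=list)            # any other a= lines


def parse_fmtp_params(params: str) -> dict[str, str]:
    """Split "key=value;key=value" into a dict (values without "=" map to "")."""
    result = {}
    for item in params.split(";"):
        item = item.strip()
        if item:
            key, _, value = item.partition("=")
            result[key] = value
    return result


def parse_media(sdp: str) -> list[MediaDescription]:
    medias: list[MediaDescription] = []
    for line in (raw.strip() for raw in sdp.splitlines()):
        if line.startswith("m="):
            media, port, profile, *fmts = line[2:].split()
            medias.append(MediaDescription(media, int(port), profile, [int(f) for f in fmts]))
        elif line.startswith("a=") and medias:  # session-level a= lines are skipped
            attr, current = line[2:], medias[-1]
            if attr.startswith("rtpmap:"):
                pt, encoding = attr[len("rtpmap:"):].split(" ", 1)
                current.rtpmap[int(pt)] = encoding
            elif attr.startswith("fmtp:"):
                pt, params = attr[len("fmtp:"):].split(" ", 1)
                current.fmtp[int(pt)] = parse_fmtp_params(params)
            else:
                current.attributes.append(attr)
    return medias
```

Applied to Example 1, `parse_media` returns a single `MediaDescription` with media "video", port 5004, profile "RTP/AVP", payload types [96] and rtpmap {96: "VP8/90000"}.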

Example 2: Annotated SDP

SDP does not allow comments, but if it did, we could see one like this:

# Protocol version; always 0
v=0
# Originator and session identifier
o=jdoe 2890844526 2890842807 IN IP4 224.2.17.12
# Session description
s=SDP Example
# Connection information (network type and host address, like in 'o=')
c=IN IP4 224.2.17.12
# NTP timestamps for start and end of the session; can be 0
t=2873397496 2873404696
# First media: a video stream with these parameters:
# * The RTP port is 5004
# * The RTCP port is 5005 (implicitly by using RTP+1)
# * Adheres to the "RTP Profile for Audio and Video" (RTP/AVP)
# * Payload Type can be 96 or 97
m=video 5004 RTP/AVP 96 97
# Payload Type 96 encoding corresponds to VP8 codec
a=rtpmap:96 VP8/90000
# Payload Type 97 encoding corresponds to H.264 codec
a=rtpmap:97 H264/90000

In this example we can see how a single media description can list multiple Payload Types (PT), leaving open which of them will actually be used. Recall that the PT is the number in the RTP packet header that identifies one set of encoding properties, including codec, codec settings, and other formats.

Example 3: Multiple media & Payload Types

One single SDP message can be used to define multiple media tracks, just by stacking media-level descriptions one after another.

Also, several different encodings can be defined for each media, by listing more Payload Types (in order of preference) and what codec should be sent for each one. This allows each peer to choose among a range of codecs, according to its preferences.

For example:

v=0
o=- 0 0 IN IP4 127.0.0.1
s=-
c=IN IP4 127.0.0.1
t=0 0
m=audio 5006 RTP/AVP 111
a=rtpmap:111 opus/48000/2
a=fmtp:111 minptime=10;useinbandfec=1
m=video 5004 RTP/AVP 96 98 102
a=rtcp:54321
a=rtpmap:96 VP8/90000
a=rtpmap:98 VP9/90000
a=rtpmap:102 H264/90000
a=fmtp:102 profile-level-id=42001f

In this example, there are two media tracks defined. First, an audio track:

m=audio 5006 RTP/AVP 111
a=rtpmap:111 opus/48000/2
a=fmtp:111 minptime=10;useinbandfec=1

where:

- Incoming RTP packets are expected at port 5006.

- Incoming RTCP packets are expected at port 5006 + 1 = 5007.

- The media-level description adheres to the basic RTP/AVP profile.

- The Payload Type should be 111, for which:

  - The codec is Opus, with 48000 Hz and 2 channels (stereo).

  - The advanced Opus codec parameters minptime and useinbandfec should be as indicated in the fmtp ("format parameters") line.

Secondly, a video track:

m=video 5004 RTP/AVP 96 98 102
a=rtcp:54321
a=rtpmap:96 VP8/90000
a=rtpmap:98 VP9/90000
a=rtpmap:102 H264/90000
a=fmtp:102 profile-level-id=42001f

where:

- Incoming RTP packets are expected at port 5004.

- Incoming RTCP packets are expected at port 54321.

- The media-level description adheres to the basic RTP/AVP profile.

- The Payload Type should be one of 96, 98, or 102; this participant prefers 96, but remote peers could choose to send any of the three PTs offered here.

  - PT 96 is expected to be video encoded with the VP8 codec.

  - PT 98 is VP9.

  - PT 102 is H.264. For this PT, the advanced H.264 codec parameter profile-level-id should be configured as 42001f.

SDP common settings

There are some SDP attributes that are not mandatory, but in practice are almost always used, especially for WebRTC communications. Here we'll have a look at them.

a=rtcp

The initial SDP examples had already explained the rule of how the RTCP port is implicitly defined to be the RTP port + 1. This means that when the SDP message tells other RTP participants that their data should be sent to port N, they should deduce that their RTCP Sender Reports should be sent to port N + 1.

The attribute a=rtcp (defined in RFC 3605) makes this information explicit. It allows an RTP participant to state that its listening port for remote RTCP packets is the one indicated by this attribute.

For example:

m=audio 49170 RTP/AVP 0
a=rtcp:53020

A remote peer wanting to send media to this participant would have to send the RTP packets to port 49170, and RTCP Sender Reports to port 53020.
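In code, this rule reduces to a small lookup. A sketch in Python, with a hypothetical helper that takes the RTP port from the m= line and the a= attribute values of the same media description (without the "a=" prefix):

```python
def remote_rtcp_port(rtp_port: int, attributes: list[str]) -> int:
    """Port to which RTCP should be sent for one media-level description."""
    for attr in attributes:
        if attr.startswith("rtcp:"):
            # RFC 3605 format: "rtcp:<port> [<nettype> <addrtype> <address>]"
            return int(attr[len("rtcp:"):].split()[0])
    # No explicit attribute: implicit rule, RTP port + 1.
    return rtp_port + 1
```

For the media description above this gives 53020; without the a=rtcp line, it would fall back to 49171.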

a=rtcp-mux

While a=rtcp lets a participant state explicitly which local port it listens on for incoming RTCP packets, a=rtcp-mux tells other peers that the RTP and RTCP ports are the same.

This feature (defined in RFC 5761) is called RTP and RTCP multiplexing, and allows remote peers to send both types of packets to the same port: the one specified in the media-level description.

For example:

m=audio 49170 RTP/AVP 0
a=rtcp-mux

Of course, participants that add this attribute to their SDP messages must be able to demultiplex packets according to their type, as port-based classification will not be available given that all RTP and RTCP packets will arrive at the same port.
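One common way to do this demultiplexing, described in RFC 5761, is to look at the second byte of each packet: in RTCP it holds the packet type, which falls in the range 192-223, while in RTP it holds the marker bit plus the Payload Type, and RFC 5761 restricts Payload Types 64-95 when multiplexing so that RTP never lands in that range. A minimal Python sketch with a hypothetical function name:

```python
def classify_packet(packet: bytes) -> str:
    """Tell RTP and RTCP apart when both arrive on the same port (rtcp-mux)."""
    # Both RTP and RTCP carry version 2 in the two most significant bits.
    if len(packet) < 2 or packet[0] >> 6 != 2:
        return "other"  # e.g. STUN or DTLS, when those share the port too
    # Second byte: RTCP packet type, or RTP marker bit + Payload Type.
    return "rtcp" if 192 <= packet[1] <= 223 else "rtp"
```

Note that an RTP packet with Payload Type 96 and the marker bit set has 224 in its second byte, which is just above the RTCP range.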

Symmetric RTP

Symmetric RTP / RTCP (defined in RFC 4961) refers to the fact that the same local UDP port is used for both inbound and outbound packets. This is not an SDP attribute, however it's an operating mode frequently seen in RTP implementations; some of them even require usage of this feature (such as mediasoup).

Normally, RTP participants only have to configure input port numbers when asking the Operating System to open their UDP sockets for listening. On the other hand, output ports are typically left to be chosen randomly by the O.S., because in the common model of IP communications the source port is not that important; only the destination port is.

An endpoint that claims to be compatible with Symmetric RTP, however, would explicitly bind its output socket to the same local port that is used for receiving incoming data. The net effect of this is that the same UDP port is used for both receiving and sending RTP packets.

Also note that using Symmetric RTP does not necessarily imply RTP and RTCP multiplexing, as this section's image might suggest; it's perfectly possible to have different ports for RTP and RTCP, but with Symmetric RTP they would be used for both sending and receiving RTP or RTCP, respectively.
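In socket terms, Symmetric RTP means using one socket, bound to the advertised local port, for both receiving and sending. A minimal Python sketch on the loopback interface; the helper name is hypothetical, and the demo binds to port 0 (any free port) only so that it can run anywhere, whereas a real endpoint would bind to the port announced in its SDP:

```python
import socket


def open_symmetric_socket(local_port: int, host: str = "0.0.0.0") -> socket.socket:
    """One UDP socket, bound to the advertised port, used to both send and receive."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, local_port))
    return sock


if __name__ == "__main__":
    # Two peers on the loopback interface.
    peer_a = open_symmetric_socket(0, "127.0.0.1")
    peer_b = open_symmetric_socket(0, "127.0.0.1")
    peer_b.settimeout(1.0)

    # Peer A sends from its listening socket, so the packet's source port
    # is the same port on which A receives.
    peer_a.sendto(b"fake RTP packet", peer_b.getsockname())
    _, source = peer_b.recvfrom(2048)
    print("A listens on", peer_a.getsockname()[1], "and sent from", source[1])

    peer_a.close()
    peer_b.close()
```

A non-symmetric sender would instead send from a separate socket, whose source port is picked by the operating system; peers that rely on Symmetric RTP would then see packets coming from an unexpected port.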

a=rtcp-rsize

When using the RTP Profile for RTCP-Based Feedback (RTP/AVPF, RFC 4585), it is possible to enable extra RTCP Feedback messages such as NACK, PLI, and FIR. These convey information about the reception of a stream, and the sender should be able to receive and react to these messages as fast as possible.

Making these RTCP-FB messages smaller means that more of them can be sent, with minimal delay and with a lower probability of being dropped by the network. For these reasons, Reduced-Size RTCP (defined in RFC 5506) changes certain rules about the standard way of constructing RTCP messages, and allows skipping some parts that would otherwise be mandatory.

For example:

m=audio 49170 RTP/AVPF 0
a=rtcp-rsize

Note that this attribute can only be applied when using the RTP/AVPF profile.

An RTP participant that includes this attribute in the SDP message is telling other peers that they can send Reduced-Size RTCP Feedback messages.

a=sendonly, a=recvonly, a=sendrecv

It is possible for a participant to inform others about its intention to either send media, receive it, or do both. These attributes are defined in RFC 4566, and their use in SDP negotiations (that we'll talk about in the next section) is explained in RFC 3264.

Let's see examples for all three cases:

Receive-only

Example:

m=audio 49170 RTP/AVP 0
a=recvonly

With this SDP media-level description, the RTP participant is indicating that it only wants to receive RTP media, and this media should be sent by remote peers to the port 49170 (while their RTCP packets should be sent to the port 49170 + 1 = 49171).

Note that a=recvonly means that a participant doesn't want to send RTP media, but it will still send RTCP Receiver Reports to remote peers.

Send-only

Example:

m=audio 49170 RTP/AVP 0
a=sendonly

The opposite case from above: this participant does not expect any incoming media, and it only intends to send data to other peers.

Even though incoming data is not expected at port 49170, this number still has to be specified in the SDP media-level description because it is a mandatory field. Also, in this example remote peers would need it anyway, to know that their RTCP Receiver Reports must be sent to this participant's port 49171 (RTP + 1).

Send and receive

Example:

m=audio 49170 RTP/AVP 0
a=sendrecv

Finally, with this attribute the RTP participant is indicating that it will be receiving media from remote peers, and also sending media to them.

a=sendrecv is the default value assumed when no direction attribute is present, so participants that intend to both send and receive can just omit it. However, it is good practice to always include it, so the intended direction of the stream is explicit.

SDP Offer/Answer Model

We now have a clear picture of what an SDP message is, and some of the most relevant attributes that can be used for RTP communications. It's time to talk about the method that is used to actually negotiate ports, encodings, and settings, between different peers wanting to initiate an RTP session: the SDP Offer/Answer Model (RFC 3264).

This model describes a mechanism by which two RTP participants agree to a common subset of SDP codecs and settings, so each one is able to "talk" with the other. One participant offers the other a description of the desired session from their perspective, and the other participant answers with the desired session from their perspective.

The SDP Offer/Answer Model is used by protocols like SIP and WebRTC.

Operation

The SDP Offer/Answer negotiation begins when one RTP participant, called the offerer, sends an initial SDP message to another peer. This SDP message contains a description of all the media tracks and features that the offerer wants to receive, and it is called the SDP Offer.

The receiver of the SDP Offer, called the answerer, should now parse the offer and find a subset of tracks and features that are acceptable. These will then be used to build a new SDP message, called the SDP Answer, which gets sent back to the first peer.

RFC 3264 establishes the rules that should be followed in order to build an SDP Answer from a given SDP Offer; this is a quick summary of the process:

- The answerer should place its own IP address in the o= and c= lines.

- All media-level descriptions should be copied from the SDP Offer to the SDP Answer.

- If the answerer doesn't want to use some of the offered media, it should mark them as rejected by setting their RTP port to 0. In this case, all media attributes are irrelevant and can be dropped.

- If the answerer doesn't want to use some of the offered Payload Types, these can be removed from the media description.

- If the answerer doesn't accept or doesn't understand some attributes (a= lines), they should be ignored and removed from the media description.

- For the remaining media descriptions that the answerer accepts, the RTP port should be set to a new local port on the answerer's machine. The same applies to the RTCP port, if a=rtcp is in use.

- If the offer contained a=recvonly (i.e. the offerer only wants to receive media), then the answerer should replace it with a=sendonly (i.e. the answerer will only send media), and vice versa.
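The media-level rules above can be sketched in a few lines of Python. This is a deliberately simplified model, not a complete RFC 3264 implementation: `Media`, `answer_media` and `render` are hypothetical names; codecs are matched by name only (ignoring clock rates and fmtp parameters, and not recognizing static Payload Types that lack an a=rtpmap line); and every attribute other than the direction is dropped, on the assumption that a real answerer would add back the ones it supports with its own values (such as its own a=rtcp port). Answering a=sendrecv with a=sendrecv is also just one of the choices RFC 3264 allows.

```python
from dataclasses import dataclass, field
from typing import Optional

# Answer direction for each offered direction (a missing attribute means sendrecv).
FLIPPED = {"sendonly": "recvonly", "recvonly": "sendonly",
           "sendrecv": "sendrecv", "inactive": "inactive"}


@dataclass
class Media:
    kind: str
    port: int
    profile: str
    payload_types: list[int]
    rtpmap: dict[int, str] = field(default_factory=dict)
    attributes: list[str] = field(default_factory=list)


def answer_media(offer: Media, supported_codecs: set[str],
                 local_port: Optional[int]) -> Media:
    """Build one answer media description.

    supported_codecs holds upper-case codec names, e.g. {"OPUS", "H264"}.
    local_port=None means the answerer rejects this media.
    """
    accepted = [pt for pt in offer.payload_types
                if offer.rtpmap.get(pt, "").split("/")[0].upper() in supported_codecs]
    if local_port is None or not accepted:
        # Rejected: keep the m= line, with port 0 and no attributes.
        return Media(offer.kind, 0, offer.profile, offer.payload_types[:1])
    direction = next((a for a in offer.attributes if a in FLIPPED), "sendrecv")
    return Media(offer.kind, local_port, offer.profile, accepted,
                 {pt: offer.rtpmap[pt] for pt in accepted},
                 [FLIPPED[direction]])


def render(media: Media) -> list[str]:
    """Serialize one media description back into SDP lines."""
    lines = [f"m={media.kind} {media.port} {media.profile} "
             + " ".join(str(pt) for pt in media.payload_types)]
    lines += [f"a=rtpmap:{pt} {media.rtpmap[pt]}" for pt in media.payload_types
              if pt in media.rtpmap]
    lines += [f"a={attr}" for attr in media.attributes]
    return lines
```

Fed with the media descriptions of the SDP Offer in the operation example that follows, a supported set of {"OPUS", "H264"}, and None as the port for the second video, these functions produce the same media-level lines as the SDP Answer shown there.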

After an answer has been built, it is sent back to the offerer. The offerer has then a complete description of what media tracks, encodings, and other features have been accepted by the answerer. Any missing fields in the media-level description(s) indicate features that the answerer doesn't want to use or doesn't support at all, so the offerer is expected to avoid using those.

Both peers have then enough information to start transmitting or receiving media, and the SDP Offer/Answer negotiation is finished at this point.

Operation example

Let's assume this SDP Offer:

v=0
o=- 0 0 IN IP4 127.0.0.1
s=Sample SDP Offer
c=IN IP4 127.0.0.1
t=0 0

m=audio 5006 RTP/AVP 111
a=rtpmap:111 opus/48000/2
a=sendonly

m=video 5004 RTP/AVP 96 98 102
a=rtcp:5554
a=rtpmap:96 VP8/90000
a=rtpmap:98 VP9/90000
a=rtpmap:102 H264/90000
a=sendonly

m=video 9004 RTP/AVP 96
a=rtpmap:96 VP8/90000
a=sendonly

Note that the media-level descriptions have been separated to improve the readability of the file, but a real SDP message would not have blank lines like that.

This SDP Offer describes an RTP participant that wants to send 3 simultaneous media streams: 1 audio and 2 videos (it might be, for example, the user's microphone input + the user's webcam + a desktop capture). Given the rules that have been already explained, it should be possible for the reader to understand the formats that are being proposed by the offerer.

Now let's suppose that the answerer is a device which is only able to process 1 audio and 1 video stream at the same time. Also, the only available video decoder is for H.264, and it does not support the a=rtcp attribute. This might be the SDP Answer that gets generated:

v=0
o=- 3787580028 3787580028 IN IP4 172.17.0.1
s=Sample SDP Answer
c=IN IP4 127.0.0.1
t=0 0

m=audio 28668 RTP/AVP 111
a=rtpmap:111 opus/48000/2
a=recvonly

m=video 35585 RTP/AVP 102
a=rtpmap:102 H264/90000
a=recvonly

m=video 0 RTP/AVP 96

Observe that:

- The answerer fills in its own values (session ID, version, and address) in the o= line.

- The RTP port for audio is specified, so the offerer will know where to send its RTP audio (and the corresponding RTCP packets).

- The first video description has been filtered and unsupported Payload Types were removed. The only remaining one is 102, corresponding to the H.264 codec.

- The RTP port for the first video description has also been updated with the value for the answerer. Also, because the answerer didn't support standalone RTCP ports, the a=rtcp attribute has been removed. The offerer should notice this, and send its RTCP Sender Reports to the port 35586 (RTP + 1).

- The answerer only supports one simultaneous video, so the second one is rejected by setting RTP port = 0, and all its attributes are dropped.

- All direction attributes have been updated to reflect the answerer's point of view.

This SDP Answer would then be sent to the offerer, which would now have to parse it all to discover which medias, Payload Types, and attributes were accepted by the other peer, and which ones were rejected or dropped. Media will then start flowing as defined for the session.

Signaling

The Offer/Answer Model assumes the existence of a higher layer protocol which is capable of exchanging SDP messages for the purposes of session establishment between participants in the RTP session.

This is commonly called the signaling of the session, and the Offer/Answer Model doesn't specify how it should be done: some protocols, such as SIP, define their own signaling, while others, like WebRTC, leave it to each application to choose an adequate method for sending SDP messages back and forth between participants. This might be any variety of methods, such as: just copy-pasting the messages by hand; sharing a common database where the SDP messages are exchanged; direct TCP socket connections such as WebSocket; network message brokers such as RabbitMQ, MQTT, or Redis; etc.

Bonus track: Adding an 'S' to the mix

Building upon the concepts that we've seen for RTP and SDP, we can now introduce two more names that are frequently seen in the world of streaming: RTSP and SRTP. These are very different and are not to be confused, despite their similar names!

Real Time Streaming Protocol -- RTSP

RTSP (1.0: RFC 2326; 2.0: RFC 7826) joins together the concepts of RTP and SDP, taking them a step further with the addition of stream discovery and playback controls.

We could (sort of) describe RTSP as a protocol similar to HTTP: just like an HTTP server offers a method-based interface with names such as GET, POST, DELETE, CONNECT, and more, in RTSP there is a server that provides a control plane with textual verbs such as DESCRIBE, SETUP, ANNOUNCE, TEARDOWN, PLAY, PAUSE, RECORD, etc.

Clients connect to the RTSP server, and through the mentioned verbs they acquire an SDP description of the media streams that are available from the server. Once this is done, the client has a full SDP description of all streams, so it can use RTP to receive them.

RTSP is useful because it includes the "signaling" functionality that was mentioned earlier. With plain RTP and SDP, it is the application that somehow has to transmit SDP messages between RTP peers. However with RTSP the client establishes a TCP connection with the server (just like it happens with HTTP), and this channel is used to transmit all commands and SDP descriptions.
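To give a feel for this control channel, the following Python sketch builds an RTSP/1.0 DESCRIBE request, which asks the server for the SDP description of a stream, and splits a response into status line, headers, and SDP body. The URL and the sample response are made up for illustration and the helper names are hypothetical; a real client would send the request over a TCP connection to the server (port 554 by default) and then continue with SETUP and PLAY.

```python
def build_describe(url: str, cseq: int) -> bytes:
    # RTSP requests look like HTTP: request line, headers, then an empty line.
    lines = [
        f"DESCRIBE {url} RTSP/1.0",
        f"CSeq: {cseq}",               # every request carries a sequence number
        "Accept: application/sdp",     # ask for the description in SDP format
    ]
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def split_response(raw: bytes) -> tuple[str, dict[str, str], str]:
    """Split an RTSP response into (status line, headers, body)."""
    head, _, body = raw.decode("utf-8").partition("\r\n\r\n")
    status_line, *header_lines = head.split("\r\n")
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status_line, headers, body


if __name__ == "__main__":
    print(build_describe("rtsp://camera.example/stream", 2).decode("ascii"))
```

The body returned for a successful DESCRIBE is a regular SDP message, so it can be processed with the same rules explained in the previous sections.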

Secure Real-time Transport Protocol -- SRTP

The S in SRTP stands for Secure, which is the feature missing from the protocols described so far. RFC 3711 defines a method by which all RTP and RTCP packets can be transmitted in a way that keeps the audio or video payload from being captured and decoded by prying eyes. While plain RTP provides a mechanism to packetize and transmit media, it does not address security at all; any attacker might be able to join an ongoing RTP session and snoop on the content being transmitted.

SRTP achieves its objectives by providing several protections:

- Encrypts the media payload of all RTP packets. Note though that only the payload is protected, and RTP headers are unprotected. This allows for media routers and other tools to inspect the information present on the headers, maybe for distribution or statistics aggregation, while still protecting the actual media content.

- Asserts that all RTP and RTCP packets are authenticated and come from the source where they purport to be coming.

- Ensures the integrity of the entire RTP and RTCP packets, i.e. protecting against arbitrary modifications of the packet contents.

- Prevents replay attacks, a specific kind of network attack where previously captured packets are re-transmitted ("replayed") by an attacker, for example to disrupt the session or to make the receiver process stale data. SRTP receivers detect these by keeping track of the indices of recently received packets and discarding duplicates. In essence, replay attacks are a form of "man-in-the-middle" attacks.

An important consequence of SRTP encrypting only the payload is that it's still possible to inspect the network packets (e.g. by using Wireshark) and see all RTP header information. This proves invaluable when the need arises for debugging a failing stream!

This is the visualization of an RTP packet that has been protected with SRTP:

     0                   1                   2                   3
     0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ \
    |V=2|P|X|  CC   |M|     PT      |       sequence number         | |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ |
    |                           timestamp                           | |
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ |
    |           synchronization source (SSRC) identifier            | |
    +=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+=+ |
    |            contributing source (CSRC) identifiers             | |-+
    +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | |
    |                   RTP extension (OPTIONAL)                    | | |
  / +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | |
  | |                          payload  ...                         | | |
+-| |                               +-------------------------------+ | |
| | |                               | RTP padding   | RTP pad count | | |
| \ +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ / |
|   ~                     SRTP MKI (OPTIONAL)                       ~   |
|   +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+   |
|   :                 authentication tag (RECOMMENDED)              :   |
|   +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+   |
|                                                                       |
+---- Encrypted Portion                     Authenticated Portion ------+

For a full description of all fields, refer to RFC 3550 (RTP) and RFC 3711 (SRTP).

References

RTP:

- RFC 3550 | RTP: A Transport Protocol for Real-Time Applications

- RFC 3551 | RTP Profile for Audio and Video (RTP/AVP)

- RFC 4585 | RTP Profile for RTCP-Based Feedback (RTP/AVPF)

- RFC 4961 | Symmetric RTP and RTCP

- RFC 5104 | Codec Control Messages in RTP/AVPF

SDP:

- RFC 4566 | SDP: Session Description Protocol

- RFC 3605 | RTCP attribute in SDP

- RFC 3264 | SDP Offer/Answer Model

- RFC 4317 | SDP Offer/Answer Examples

- RFC 5506 | Reduced-Size RTCP

- RFC 5761 | Multiplexing RTP Data and Control Packets on a Single Port

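RTSP:

- RFC 2326 | Real Time Streaming Protocol (RTSP)

- RFC 7826 | Real-Time Streaming Protocol Version 2.0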

SRTP:

- RFC 3711 | Secure Real-time Transport Protocol (SRTP) and Profile (RTP/SAVP)

Comments and questions

Did you find a bug in this post? Have any questions? Feel free to comment in Kurento Mailing List / Forums, at https://groups.google.com/forum/#!forum/kurento

Thanks for reading!

Juan Navarro -- Kurento maintainer & developer

j1elo @ Twitter / GitHub
