In this series of posts we are talking about RTP and SDP:

- RTP (I): Intro to RTP and SDP

- RTP (II): Streaming with FFmpeg

While RTP is a pretty well established standard, not all extensions and operation modes are necessarily supported by all implementations. We'll be having a look at how these are handled by some of the best known open-source multimedia tools, FFmpeg and GStreamer: what are the characteristics and shortcomings of their RTP implementations, bugs, things to keep in mind, etc.

Using these tools can be somewhat confusing or complex for the uninitiated, though. The fact of the matter is that media processing is in and of itself a complex topic, and tools like FFmpeg or GStreamer couldn't do much to smooth this over without compromising on their feature set. You still need to know what a codec, a parser, or a filter is, how and why you are using them together, and be able to work your way through technical documentation and ask the right questions, in order to use these tools effectively.

All the examples shown here will be formatted for a Linux terminal. The command syntax is generally the same on all platforms, but you might need to adapt some platform-specific details such as continuation lines (\ on Linux, ^ on Windows), or access to environment variables ($NAME or ${NAME} on Linux, %NAME% on Windows).

Transcoding: friend or foe?

Before heading directly into practical examples of RTP streaming, we have to talk about transcoding, a concept that ends up appearing sooner or later around the topic of media streaming.

Transcoding is the act of transforming one media encoding format directly to another. This is typically done for compatibility purposes, such as when a media source provides a format that the intended target is not able to process; an in-between adaptation step is required:

- Decode media from its originally encoded state into raw, uncompressed information.

- Encode the raw data back, using a different codec that is supported by our intended consumer.

Let's see an example:

Here, we have a Matroska file video.mkv, containing video that was encoded with Google's VP8 and audio encoded with the Opus codec. However, our consumer is only able to process MP4 files with video encoded with H.264 and audio encoded with MP3. A transcoding step is needed to convert between these: video and audio need to be re-encoded with the correct codecs, and the results have to be stored in the proper container format.
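As a rough sketch, the conversion described above could be wrapped in a small shell function like the following. It assumes an FFmpeg build that includes the libx264 and libmp3lame encoders; the function name and the FFMPEG variable (which lets you substitute another command for a dry run) are illustrative choices, not part of FFmpeg.

```shell
# Command to run; override FFMPEG to dry-run with a different command.
FFMPEG="${FFMPEG:-ffmpeg}"

# Transcode a Matroska file (VP8 + Opus) into an MP4 file (H.264 + MP3).
# $1 = input file, $2 = output file.
# Assumes the FFmpeg build includes the libx264 and libmp3lame encoders.
transcode_to_mp4() {
    "$FFMPEG" \
        -i "$1" \
        -c:v libx264 \
        -c:a libmp3lame \
        "$2"
}

# Usage: transcode_to_mp4 video.mkv video.mp4
```

The output container is not named explicitly: FFmpeg guesses it from the .mp4 extension of the output file.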

Note that when streaming video and/or audio with RTP, containers are not an issue because the inner media tracks themselves are transmitted. The video and audio tracks are extracted ("demultiplexed", in the multimedia jargon) from the container and then streamed as-is, without first reintroducing (or "multiplexing") them into any container.

Pros and cons of transcoding

Modern codecs are incredibly sophisticated and able to obtain huge savings in file size without introducing much quality loss due to compression, but doing so comes at a high cost in terms of computational resources. While at first sight it seems that transcoding might always be a good thing, because it makes sure that our media will be compatible with the limitations of our consumers, the fact is that the transcoding step will rapidly saturate the CPU as soon as several streams need to be processed at the same time.

It is our job as developers or software architects to decide whether transcoding is really necessary, and it's always a good idea to minimize the number of cases where it is enabled.

FFmpeg basics

FFmpeg describes itself as "A complete, cross-platform solution to record, convert and stream audio and video". It is a library that can be used as a dependency in our software projects, providing a lot of powerful functionality for media processing; but it also offers a command-line program which can be used to access its power without having to write any code.

As of this writing, the latest version is FFmpeg 4.2.2. On Linux, some distros might still provide old versions of FFmpeg 2.x or 3.x, so if that's your case, the recommendation is to download an up-to-date static build from John Van Sickle's website. Similarly, you should always try to use the latest version for Windows or Mac systems.

FFmpeg command-line parameters are sensitive to the order in which they are given: those applying to an input must come before that input's URL, and those applying to an output must come after the input but before that output's URL:

ffmpeg \
    [global_options] \
    { [input_options] -i input_url } \
    { [output_options] output_url }

Our examples will generally follow this structure.

RTP streaming command walkthrough

Let's start by showing the simplest example of how to read a local video file and stream it with RTP:

ffmpeg \
    -re \
    -i video.mp4 \
    -an \
    -c:v copy \
    -f rtp \
    -sdp_file video.sdp \
    "rtp://192.168.1.109:5004"

Now this is what is achieved by each parameter:

- -re: Treats the input as a live source, reading at the speed mandated by the input itself. By default, ffmpeg attempts to read the input(s) as fast as possible, so on a powerful computer it will probably churn through the whole file in a matter of milliseconds. However, here we want to live-stream the media; think of this option as slowing down the reading of the input(s) to the speed given by their native frame rate.

- -i video.mp4: Input file.

- -an: Indicates that the output won't include any audio.

- -c:v copy: Sets the output to contain a direct copy of the input video. This means that transcoding is disabled.

- -f rtp: Sets the output format; in this case we are generating an RTP stream, so the format is rtp. If we were generating a file, this parameter could be omitted because the format would be guessed from the output file's extension.

- -sdp_file video.sdp: Writes an auto-generated SDP to a file. This file can then be loaded into another media player in order to play the RTP stream back.

- rtp://192.168.1.109:5004: Finally, the last parameter is the output URL, where the RTP protocol, destination IP address, and destination RTP port are specified.

There is a notable takeaway from this example: only one media track can be streamed at the same time. If we try to add an audio track to our streaming, by replacing -an ("no audio") with -c:a copy ("copy audio"), we get the following error:

[rtp @ 0x6fec300] Only one stream supported in the RTP muxer
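A possible way around this limitation is to declare one RTP output per track, since the restriction applies to each RTP output and not to the whole command. The following is only a sketch that was not part of the tests done for this article: the function name, the FFMPEG variable, and the ports 5004 (video) and 5006 (audio) are arbitrary assumptions. As far as we know, -sdp_file is a global option, so a single SDP file should end up describing both streams.

```shell
# Command to run; override FFMPEG to dry-run with a different command.
FFMPEG="${FFMPEG:-ffmpeg}"

# Stream video and audio as two separate RTP sessions, one output per track.
# $1 = input file, $2 = destination IP address, $3 = SDP file to write.
# Ports 5004 (video) and 5006 (audio) are arbitrary choices for this sketch.
stream_video_and_audio() {
    "$FFMPEG" \
        -re \
        -i "$1" \
        -sdp_file "$3" \
        -an -c:v copy -f rtp "rtp://$2:5004" \
        -vn -c:a copy -f rtp "rtp://$2:5006"
}

# Usage: stream_video_and_audio video.mp4 192.168.1.109 video.sdp
```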

Also, note that the generated SDP file is a literal description of what is being sent from the tool, not of what is being expected by the tool (i.e. this is not an SDP Offer/Answer as we saw in our previous introduction to SDP: Session Description Protocol). We can use this SDP file as input to any media playback software that supports receiving RTP streams, and it should work fine.

In our example, this is the SDP file that gets generated:

v=0
o=- 0 0 IN IP4 127.0.0.1
s=No Name
c=IN IP4 192.168.1.109
t=0 0
a=tool:libavformat 58.29.100
m=video 5004 RTP/AVP 96
a=rtpmap:96 H264/90000
a=fmtp:96 packetization-mode=1; profile-level-id=42C01F
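To quickly check what a generated SDP file describes, the port, Payload Type, and codec can be pulled out of it with a bit of awk. This is a minimal sketch, assuming an SDP with one field per line and a single format per m= line; the function name sdp_summary is made up for this example.

```shell
# Print "<media> <port> <payload type> <encoding>/<clock rate>" for each
# media section of an SDP file, by pairing m= lines with a=rtpmap lines.
# $1 = SDP file.
sdp_summary() {
    awk '
        /^m=/ {
            split(substr($0, 3), m, " ")    # m=<media> <port> <proto> <fmt>
            media = m[1]; port = m[2]; pt = m[4]
        }
        /^a=rtpmap:/ {
            split(substr($0, 10), r, " ")   # a=rtpmap:<pt> <encoding>/<rate>
            if (r[1] == pt) print media, port, pt, r[2]
        }
    ' "$1"
}

# Usage: sdp_summary video.sdp
```

For the SDP shown above, this prints a single line with the media type, destination port, Payload Type number, and the encoding name with its clock rate.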

BUG:

Actually, with the tested FFmpeg version, the SDP file starts with an invalid line: SDP:. This is a bogus line and we must manually remove it before using it with any other media player. (Bug report)

Workaround: fix the SDP file with this command that deletes the unwanted line (or delete it by hand):

sed -i '/^SDP:/d' video.sdp

UPDATE: This bug is fixed in FFmpeg ≥ 4.3.

Additional features

Looking back at our RTP introduction article, there are some additional features that we can add to our FFmpeg-based RTP server:

RTCP port

It is possible to specify an RTCP port other than the default RTP + 1; this will make FFmpeg send its RTCP Sender Reports to the indicated destination port. We just have to add the parameter rtcpport to the output URL. For example:

ffmpeg -re -i video.mp4 -an -c:v copy -f rtp -sdp_file video.sdp \
    "rtp://192.168.1.109:5004?rtcpport=54321"

BUG:

FFmpeg lacks an important feature here: no media attribute a=rtcp:54321 is added to the resulting SDP file. The setting applies correctly, in the sense that RTCP Sender Reports are actually sent to the indicated destination port, but the SDP file won't include the required attribute. And if the SDP file doesn't include this attribute, the RTP consumer will not know about the RTCP port that should be used! (Bug report)
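Until this is fixed, the attribute can be added by hand for receivers that honor it. The following sketch prints a copy of the SDP with an a=rtcp line placed before the first attribute of the video section; it assumes an SDP with a single video section, and the function name sdp_add_rtcp_port is made up for this example.

```shell
# Print an SDP with "a=rtcp:<port>" added to its video media section,
# right before the first a= line that follows the m=video line.
# $1 = SDP file, $2 = RTCP port.
sdp_add_rtcp_port() {
    awk -v port="$2" '
        /^m=video / { in_video = 1 }
        in_video && !done && /^a=/ { print "a=rtcp:" port; done = 1 }
        { print }
        END { if (in_video && !done) print "a=rtcp:" port }
    ' "$1"
}

# Usage: sdp_add_rtcp_port video.sdp 54321 > video-rtcp.sdp
```

The new line goes after any b= line because SDP expects bandwidth lines to come before attributes within a media section.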

Symmetric RTP

It's also possible to explicitly bind outbound RTP and/or RTCP packets to any desired local ports, thus achieving the behavior expected for Symmetric RTP. We can do this with the URL parameters localrtpport and localrtcpport. For example:

ffmpeg -re -i video.mp4 -an -c:v copy -f rtp -sdp_file video.sdp \
    "rtp://192.168.1.109:5004?rtcpport=5005&localrtpport=5004&localrtcpport=5005"

This would allow a receiving application to inspect incoming RTP and RTCP packets, observe the source ports where they come from, and use those ports as destination for its RTCP Receiver Reports.

RTP and RTCP multiplexing

FFmpeg doesn't seem to support RTP and RTCP multiplexing at all; a cursory search of its source code found no mention of the SDP attribute a=rtcp-mux, so this feature is most likely not included.

Timestamps correction

Sometimes our source video file sort of works, but it was created with wrong timestamps (PTS and DTS) or, even worse, timestamp information is missing altogether. To avoid these kinds of issues, we can add the genpts input flag, which makes FFmpeg generate missing PTS values for our RTP flow:

ffmpeg \
    -re \
    -fflags +genpts \
    -i video.mp4 \
    -an \
    -c:v copy \
    -f rtp \
    -sdp_file video.sdp \
    "rtp://192.168.1.109:5004"

Transcoding the input

If you want to add transcoding to the mix, just change the codec parameter -c:v copy to the desired one. For example, our source video is encoded with H.264; we might want to have it transcoded to VP8, and would do so with this command:

ffmpeg \
    -re \
    -fflags +genpts \
    -i video.mp4 \
    -an \
    -c:v vp8 \
    -f rtp \
    -sdp_file video.sdp \
    "rtp://192.168.1.109:5004"

and the resulting SDP file is this one:

v=0
o=- 0 0 IN IP4 127.0.0.1
s=No Name
c=IN IP4 192.168.1.109
t=0 0
a=tool:libavformat 58.29.100
m=video 5004 RTP/AVP 96
b=AS:200
a=rtpmap:96 VP8/90000

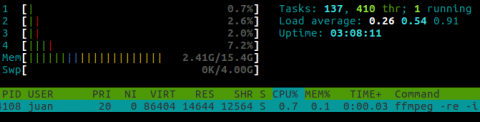

However, keep an eye on the CPU usage that is introduced by the transcoding step! Here is a typical example of what you could see, a capture of htop showing CPU usage with non-transcoding stream (-c:v copy):

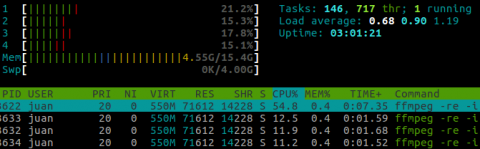

And the multi-threaded CPU encoding done by the VP8 encoder during transcoding-enabled streaming (-c:v vp8):

Note how my CPU went from 0.7% in the first case, to somewhere between 10% and 55% in the second case.

Stream playback: Receiving RTP

Now that FFmpeg is sending RTP, we can start a receiver application that gets the stream and shows the video, by using any media player that is compatible with SDP files. This proves that our RTP streaming is working fine.

We'll show how you can open the RTP stream with three tools: FFmpeg, GStreamer, and VLC. In general, none of these tools can open the RTP stream directly (by providing them with an rtp:// URL); an SDP file must be provided instead. This happens because using dynamic Payload Types forces all formatting and encoding information to be transmitted out-of-band in the SDP message, instead of being able to rely on the pre-defined set of default codecs. Read more on this in our previous explanation of Payload Types, in RTP packets.
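The distinction can be reduced to a simple range check on the Payload Type number: values from 96 to 127 are dynamic and only get a meaning from an a=rtpmap line in the SDP. A minimal sketch follows; the function name and the printed labels are made up for this example.

```shell
# Classify an RTP Payload Type number (a 7-bit value, 0 to 127).
# $1 = Payload Type number.
payload_type_kind() {
    if [ "$1" -ge 96 ] && [ "$1" -le 127 ]; then
        # Meaning is defined only by an a=rtpmap line in the SDP.
        echo "dynamic"
    elif [ "$1" -ge 0 ] && [ "$1" -le 95 ]; then
        # Pre-defined by the RTP audio/video profile, if assigned at all.
        echo "static or unassigned"
    else
        echo "invalid payload type: $1" >&2
        return 1
    fi
}

# Usage: payload_type_kind 96
```

Our stream uses Payload Type 96, which falls in the dynamic range, hence the need for an SDP file.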

FFmpeg playback

First, let's see how FFmpeg requires using an SDP file as input, by trying with a direct RTP URL:

ffplay \
    -protocol_whitelist rtp,udp \
    -i "rtp://127.0.0.1:5004"

This fails with the following error:

[rtp @ 0x7ff5f8000bc0] Unable to receive RTP payload type 96 without an SDP file describing it

To use a Payload Type in the dynamic range (>= 96), FFmpeg absolutely requires using an SDP file as input. If we provide one, FFmpeg will have all necessary information to receive and decode the stream:

ffplay \
    -protocol_whitelist file,rtp,udp \
    -i video.sdp

Note that ffplay won't send RTCP Receiver Reports or any other kind of RTCP Feedback to the sender. It seems that the FFmpeg project started working on proper RTCP support a while ago, and some RTCP Feedback types such as NACK and PLI were added around 2012-2013. However, this was only added for use with the RTSP protocol, and not for plain RTP.

BUG:

Just like ffmpeg does not add the attribute a=rtcp to the SDP file, ffplay does not read this attribute, even if we add it by hand. It will blindly assume that RTCP packets will be received at the default port (RTP + 1). FFmpeg doesn't play well with itself! Thus, using the rtcpport URL parameter is not viable if FFmpeg is both the sender and the receiver of the RTP stream. (Bug report)

GStreamer playback

First of all, we'll need to install GStreamer; in Debian/Ubuntu systems, these commands will do the job:

sudo apt-get update && sudo apt-get install --yes \
    gstreamer1.0-plugins-{good,bad,ugly} \
    gstreamer1.0-{libav,tools}

Just like what happens with FFmpeg, GStreamer also requires that an SDP file is provided as input. In fact, the all-in-one player component in GStreamer is not compatible at all with rtp:// URIs:

gst-launch-1.0 playbin uri="rtp://127.0.0.1:5004"

Our attempt will be rejected with the following error:

ERROR: from element /GstURIDecodeBin:uridecodebin0: No URI handler implemented for "rtp".

However, using an SDP input works fine:

gst-launch-1.0 playbin uri="sdp://$PWD/video.sdp"

Note that the shell replaces $PWD with the full path to the current directory. This way we provide GStreamer with an absolute path to the SDP file.

Also, it's worth noting that these commands have been tested with GStreamer 1.16.1, as provided by Ubuntu 19.10 ("Eoan"). This version of GStreamer seems to have a bug in the SDP handler, which ends up printing a worrisome message:

** (gst-launch-1.0:4661): CRITICAL **: 20:06:33.944: gst_sdp_media_get_connection: assertion 'idx < media->connections->len' failed

Ironically, the message is not critical and the playback works fine.

Another note is that the uri property in playbin must use an sdp:// scheme for this to work, instead of the more intuitive file://. This is not mentioned at all in the playbin docs, and the sdp:// scheme is only introduced when reading specifically about the sdpsrc element, so watch out!

For those already initiated in the GStreamer framework and how its pipelines work, the previous command is in essence equivalent to this other one, assuming that our source file had H.264-encoded video:

gst-launch-1.0 \
    filesrc location=video.sdp \
    ! sdpdemux timeout=0 ! queue \
    ! rtph264depay ! h264parse ! avdec_h264 \
    ! videoconvert ! autovideosink
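If the stream carries VP8 instead (as in our transcoding example), a similar pipeline could be built by swapping the depayloader and decoder for their VP8 counterparts. This is a sketch that was not tested for this article; it assumes the rtpvp8depay and vp8dec elements are installed, and the function name and the GST_LAUNCH variable are illustrative.

```shell
# Command to run; override GST_LAUNCH to dry-run with a different command.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# Play a VP8 RTP stream described by an SDP file.
# $1 = SDP file.
# Assumes the rtpvp8depay and vp8dec elements are available.
play_vp8_sdp() {
    "$GST_LAUNCH" \
        filesrc location="$1" \
        ! sdpdemux timeout=0 ! queue \
        ! rtpvp8depay ! vp8dec \
        ! videoconvert ! autovideosink
}

# Usage: play_vp8_sdp video.sdp
```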

VLC playback

Again, VLC won't allow us to stream directly from a URI. Trying to open a Network Stream with rtp://@:5004 will raise these messages:

A description in SDP format is required to receive the RTP stream. Note that rtp:// URIs cannot work with dynamic RTP payload format (96).
[00007f9678000e90] rtp demux error: unspecified payload format (type 96)
[00007f9678000e90] rtp demux: A valid SDP is needed to parse this RTP stream.

On the other hand, directly opening our SDP file will work fine:

vlc video.sdp

Summary of FFmpeg features

- Sends RTCP Sender Report from the RTP sender.

- Doesn't allow sending multiple media tracks.

- Doesn't send RTCP Receiver Report, or any other kind of RTCP Feedback, from the RTP receiver.

Bugs found:

- Generates invalid SDP files (Bug report).

- Setting the RTCP port with the URL parameter rtcpport doesn't add the corresponding media-level attribute a=rtcp to the generated SDP file (Bug report).

- Playing back from an SDP file ignores the media-level attribute a=rtcp (Bug report).

Comments and questions

Did you find a bug in this post? Have any questions? Feel free to comment in Kurento Mailing List / Forums, at https://groups.google.com/forum/#!forum/kurento

Thanks for reading!

Juan Navarro -- Kurento maintainer & developer

j1elo @ Twitter / GitHub
